Monitor & Diagnose With SSH: Network & Server Insights

In today's hyper-connected world, where digital infrastructure is the backbone of nearly every operation, can you afford to have your network services fail? The ability to proactively monitor your network, diagnose issues swiftly, and maintain optimal performance is not just an advantage; it's a necessity for business continuity.

The complexity of modern IT environments, encompassing everything from File Transfer Protocol (FTP) servers and storage area networks (SANs) to the intricate workings of Linux network components, demands a sophisticated approach to monitoring. The goal is to ensure that your organization's digital assets remain accessible, responsive, and secure at all times. This is no small feat. The constant barrage of data, the ever-present threat of cyberattacks, and the pressure to deliver uninterrupted service require a proactive and intelligent strategy for network management, backed by a toolset that makes it all possible.

| Key Area | Details |
|---|---|
| Objective | Proactive network monitoring, swift issue diagnosis, and optimal performance maintenance. |
| Target Components | FTP servers, SANs, and Linux network components. |
| Core Functionality | Continuous tracking of response times, disk space, and overall system health, presented through graphs and interactive dashboards. |
| Monitoring Metrics (SSH Monitoring Tool) | CPU, memory, and disk (filesystem) utilization; throughput; interface traffic and error rates. |
| Security Features | Track failed login attempts, identify potential security threats, and gain insights into user behavior. |
| Tools and Technologies | `sshlog`, LogicMonitor, Sshsysmon. |
| Compatibility | Many Linux and Unix distributions, and certain macOS systems. |
| Agentless Monitoring | Uses SSH for data collection, so in many cases no software needs to be installed on target systems. |
| Additional Considerations | Distribution of SSH credentials; limiting the monitoring user's privileges. |
| SNMP Compatibility | SSH-based monitoring is often designed for systems where the Simple Network Management Protocol (SNMP) is not configured. |
| Data Collection Protocol | SSH (Secure Shell) |

Consider the sheer volume of data traversing your network. From the simple exchange of emails to the complex transactions of e-commerce, everything flows through your digital arteries. The stakes are high: downtime can cripple operations, hurt customer satisfaction, and lead to financial losses. That is why organizations of every size and sector are investing in robust network monitoring solutions; monitoring has become a strategic imperative.

At the heart of a strong network monitoring strategy lies the ability to diagnose network issues promptly and accurately. The ideal solution is one that provides continuous tracking of critical components such as FTP servers, storage area networks (SANs), and the underlying Linux network components. This means not just knowing that something is wrong but also understanding why it's happening and how to fix it, before it impacts the end-user experience. The solution must constantly monitor and report on response times, disk space availability, the overall health of the system, and a host of other key metrics in real time. The focus here is on providing a clear, up-to-the-minute view of your network's performance.
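As a concrete illustration of the disk-space check, the following Python sketch parses the POSIX output of `df -P -k` (as it might be returned by a remote `df` call) and flags mount points above a usage threshold. The function names and the 90% threshold are illustrative assumptions, not part of any particular monitoring product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DiskUsage:
    filesystem: str
    mount: str
    used_kb: int
    available_kb: int

    @property
    def used_percent(self) -> float:
        # Same basis as df's Capacity column: used / (used + available).
        # Reserved blocks are excluded, so this can exceed used / 1024-blocks.
        total = self.used_kb + self.available_kb
        return 100.0 * self.used_kb / total if total else 0.0


def parse_df_posix(output: str) -> List[DiskUsage]:
    """Parse `df -P -k` output, skipping the header line."""
    records = []
    for line in output.strip().splitlines()[1:]:
        fields = line.split()
        if len(fields) < 6:
            continue  # skip malformed lines
        records.append(DiskUsage(
            filesystem=fields[0],
            mount=" ".join(fields[5:]),  # mount points may contain spaces
            used_kb=int(fields[2]),
            available_kb=int(fields[3]),
        ))
    return records


def low_space(records: List[DiskUsage], threshold_percent: float = 90.0) -> List[str]:
    """Return the mount points whose usage is at or above the threshold."""
    return [r.mount for r in records if r.used_percent >= threshold_percent]


sample = """Filesystem     1024-blocks      Used Available Capacity Mounted on
/dev/sda1         41152736  38000000   3152736      93% /
/dev/sdb1        515928320 103185664 412742656      20% /srv/ftp
"""
print(low_space(parse_df_posix(sample)))  # ['/']
```

`-P` is used because POSIX mode keeps each filesystem on a single line, which makes the output safe to split on whitespace.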

The ability to visualize data is equally important. Raw data alone can be overwhelming and difficult to interpret. Effective monitoring tools present the collected information in clear, easily understandable graphs and interactive dashboards. These visual representations help identify potential problems much more easily, allowing for faster troubleshooting and preventing minor issues from escalating into major outages. It is about translating complex data into actionable insights. With the proper monitoring tools, administrators are empowered to make informed decisions, anticipate problems, and keep the network running smoothly.

One of the most versatile techniques for monitoring Linux-based systems is Secure Shell (SSH). With an SSH monitoring tool, you can gather performance and system data from numerous Linux and Unix distributions, and even from certain macOS systems. Because the protocol is so widely supported, it allows a consistent approach across a diverse infrastructure, and it unlocks access to a wealth of key performance indicators (KPIs).
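A minimal sketch of agentless collection, assuming the OpenSSH `ssh` client is installed locally and key-based authentication to the target is already set up: one helper runs a single command remotely, and a parser turns `/proc/loadavg` into load-average KPIs. The helper names are hypothetical.

```python
import subprocess
from typing import Dict, List, Optional


def ssh_command(host: str, remote_cmd: str, user: Optional[str] = None) -> List[str]:
    """Build an argv that runs remote_cmd on host without interactive prompts."""
    target = f"{user}@{host}" if user else host
    return [
        "ssh",
        "-o", "BatchMode=yes",     # fail instead of prompting for a password
        "-o", "ConnectTimeout=5",  # give up quickly on unreachable hosts
        target,
        remote_cmd,
    ]


def run_over_ssh(host: str, remote_cmd: str, user: Optional[str] = None,
                 timeout: float = 15.0) -> str:
    """Run remote_cmd on host and return its stdout.

    Raises subprocess.CalledProcessError on a non-zero exit and
    subprocess.TimeoutExpired if the whole call exceeds `timeout` seconds.
    """
    result = subprocess.run(
        ssh_command(host, remote_cmd, user),
        capture_output=True, text=True, timeout=timeout, check=True,
    )
    return result.stdout


def parse_loadavg(text: str) -> Dict[str, float]:
    """Parse /proc/loadavg, e.g. '0.42 0.35 0.30 1/123 4567'."""
    one, five, fifteen = (float(x) for x in text.split()[:3])
    return {"load1": one, "load5": five, "load15": fifteen}
```

For example, `parse_loadavg(run_over_ssh("web01.example.com", "cat /proc/loadavg"))` would return the 1-, 5-, and 15-minute load averages of a hypothetical host `web01.example.com`. `BatchMode=yes` makes an unattended collector fail fast instead of hanging on a password prompt.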

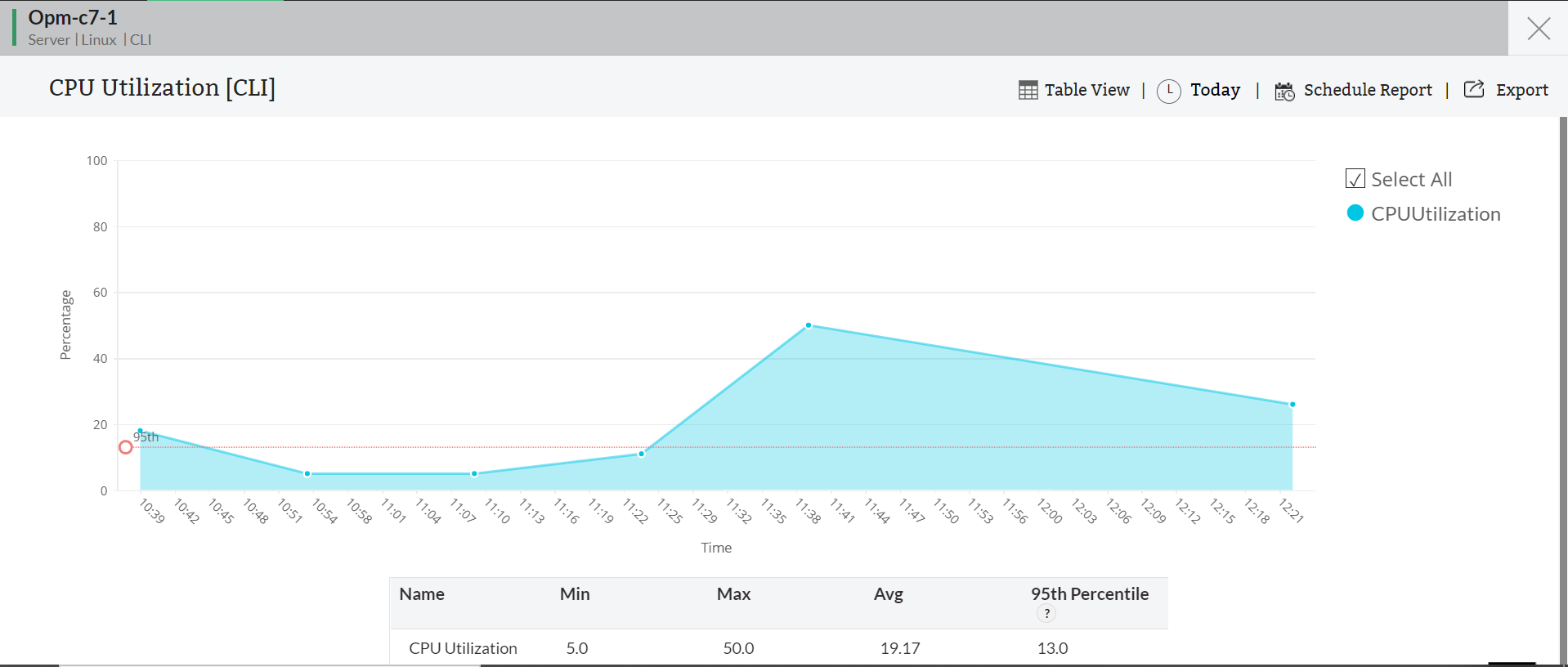

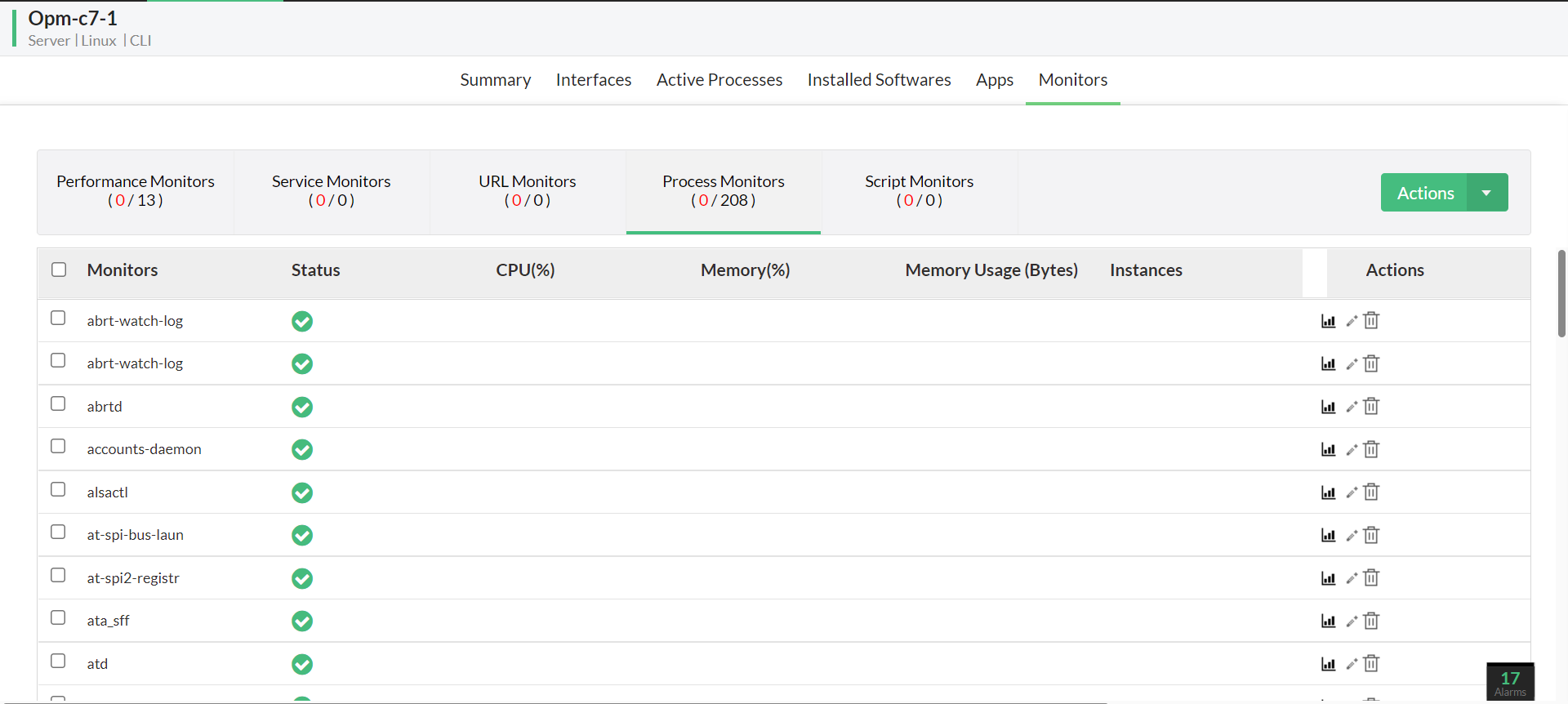

Real-time monitoring of Linux servers is valuable because it tracks CPU utilization, disk space utilization, and memory consumption as they change. By continuously assessing these metrics, administrators can identify bottlenecks, spot underutilized resources, and address potential performance issues before they degrade the user experience. Beyond the servers themselves, the health and performance of Linux network devices should also be monitored proactively. Monitoring network devices over SSH involves checking interface traffic, error rates, and routing performance, which helps confirm that the devices are operating without errors. In short, this proactive monitoring provides the insights needed to keep a network humming along in top form.
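The CPU and memory figures described above can be derived from standard Linux `/proc` files. This sketch assumes you have already fetched the text of `/proc/stat` (twice, a few seconds apart) and of `/proc/meminfo`, for example with `ssh host cat /proc/stat`. CPU utilization is the share of non-idle time between the two snapshots; memory use is based on `MemAvailable`, which requires Linux 3.14 or later.

```python
from typing import Tuple


def cpu_times(proc_stat: str) -> Tuple[int, int]:
    """Return (idle, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    for line in proc_stat.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            # Columns: user nice system idle iowait irq softirq steal guest guest_nice.
            # guest and guest_nice are already included in user and nice, so stop at steal.
            values = [int(v) for v in fields[1:9]]
            idle = values[3] + values[4]  # idle + iowait
            return idle, sum(values)
    raise ValueError("no aggregate 'cpu' line in /proc/stat text")


def cpu_utilization(before: str, after: str) -> float:
    """Percentage of CPU time spent busy between two /proc/stat snapshots."""
    idle0, total0 = cpu_times(before)
    idle1, total1 = cpu_times(after)
    elapsed = total1 - total0
    if elapsed <= 0:
        return 0.0
    return 100.0 * (1.0 - (idle1 - idle0) / elapsed)


def memory_used_percent(meminfo: str) -> float:
    """Percentage of memory in use, from MemTotal and MemAvailable (both in kB)."""
    values = {}
    for line in meminfo.splitlines():
        key, sep, rest = line.partition(":")
        if sep and rest.split():
            values[key.strip()] = int(rest.split()[0])
    return 100.0 * (1.0 - values["MemAvailable"] / values["MemTotal"])
```

Two snapshots are needed because `/proc/stat` holds cumulative counters since boot; only their difference reflects current load.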

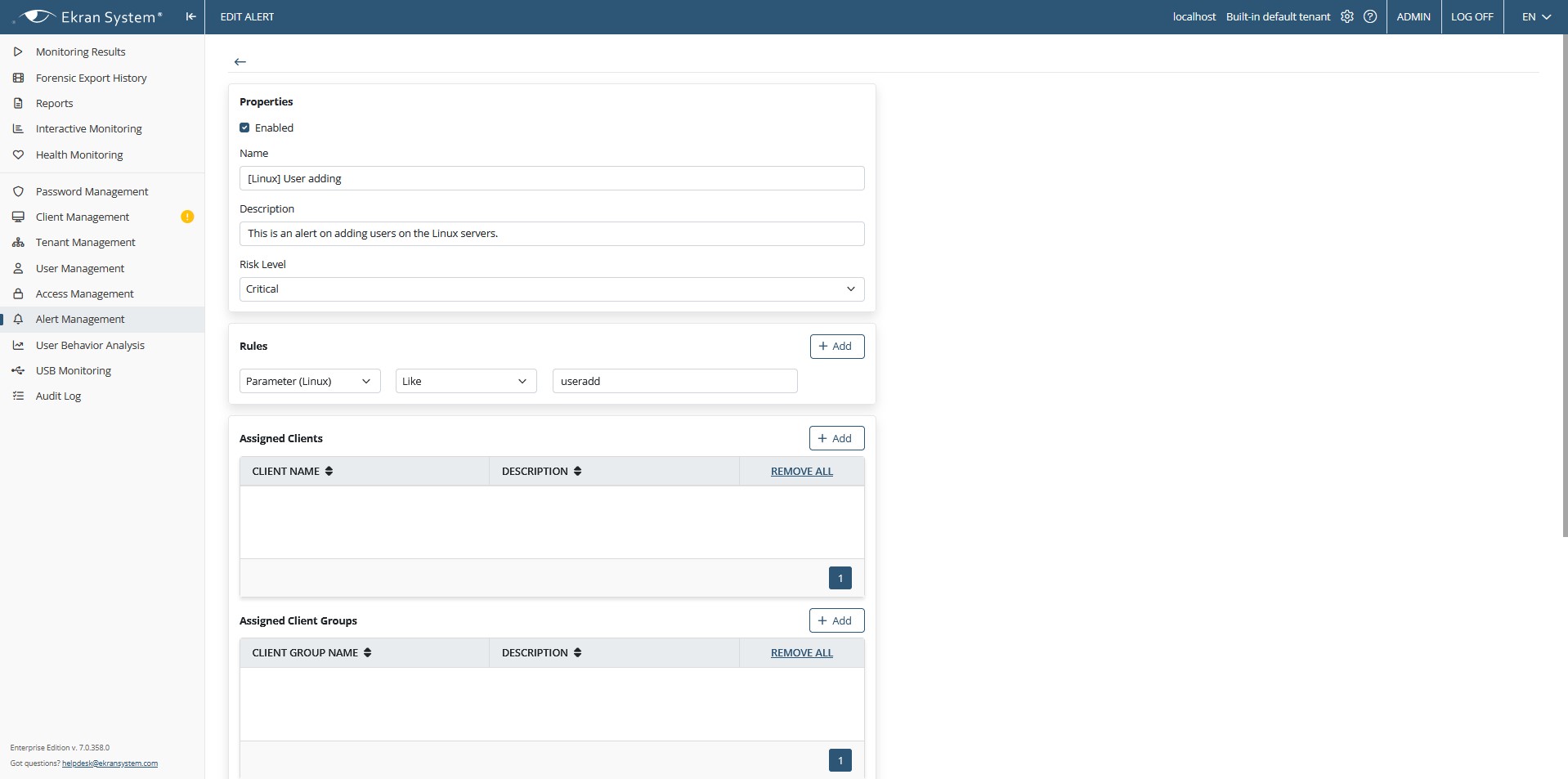

The benefits extend beyond performance monitoring. Server administrators can utilize tools like `sshlog` to track failed login attempts. These can be critical indicators of security threats, such as brute-force attacks or unauthorized access attempts. By monitoring and analyzing these attempts, administrators can proactively identify and mitigate potential vulnerabilities, safeguarding sensitive data and maintaining the integrity of the system. Monitoring with tools such as `sshlog` also allows for insights into user behavior on the server. By analyzing login patterns, access times, and command usage, administrators can gain a deeper understanding of how users interact with the system. Such information can be used to optimize system resources, improve security policies, and identify potential training needs.
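Failed-login tracking of this kind can be approximated with the Python standard library alone. This sketch does not use `sshlog`; it assumes OpenSSH `sshd` log lines of the usual `Failed password for ... from <ip> port <n>` form, as typically found in `/var/log/auth.log` on Debian and Ubuntu or `/var/log/secure` on RHEL-family systems, and counts failures per source address.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Set, Tuple

# Matches OpenSSH sshd lines such as:
#   sshd[2101]: Failed password for invalid user admin from 203.0.113.5 port 52344 ssh2
FAILED_RE = re.compile(
    r"Failed (?:password|publickey) for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<ip>[0-9A-Fa-f:.]+) port \d+"
)


def failed_logins_by_ip(lines: Iterable[str]) -> Tuple[Counter, Dict[str, Set[str]]]:
    """Count failed SSH logins per source IP and record which usernames were tried."""
    counts: Counter = Counter()
    users: Dict[str, Set[str]] = {}
    for line in lines:
        match = FAILED_RE.search(line)
        if match:
            ip = match["ip"]
            counts[ip] += 1
            users.setdefault(ip, set()).add(match["user"])
    return counts, users
```

Passing an open log file works directly, since iterating a file yields lines; `counts.most_common(10)` then lists the noisiest sources. The per-IP username sets help distinguish a user mistyping a password from an attacker cycling through account names.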

LogicMonitor, for instance, provides a robust monitoring solution for Linux systems that uses the SSH protocol to collect a wide array of metrics, including CPU, memory, and filesystem utilization as well as throughput, giving a comprehensive view of system health and performance. Note, however, that this type of monitoring is designed primarily for systems where SNMP (Simple Network Management Protocol) is not configured or available. In environments where SNMP is already in use, other monitoring methods may be preferred because of their more extensive capabilities and pre-configured dashboards.
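Throughput and interface error rates can be computed from two samples of `/proc/net/dev` taken a known interval apart. This is a sketch of the general technique, not a description of how LogicMonitor collects the metric; it assumes the counters did not reset (for example, through a reboot) between samples.

```python
from typing import Dict

Counters = Dict[str, Dict[str, int]]


def parse_net_dev(text: str) -> Counters:
    """Parse /proc/net/dev into per-interface byte and error counters."""
    stats: Counters = {}
    for line in text.splitlines()[2:]:  # the first two lines are column headers
        name, sep, rest = line.partition(":")
        if not sep:
            continue
        f = [int(x) for x in rest.split()]
        # Receive:  bytes packets errs drop fifo frame compressed multicast
        # Transmit: bytes packets errs drop fifo colls carrier compressed
        stats[name.strip()] = {
            "rx_bytes": f[0], "rx_errs": f[2],
            "tx_bytes": f[8], "tx_errs": f[10],
        }
    return stats


def interface_rates(before: Counters, after: Counters,
                    interval_s: float) -> Dict[str, Dict[str, float]]:
    """Throughput in bits/s and new error count per interface between two samples.

    Assumes the counters did not reset between the samples.
    """
    rates = {}
    for iface, a in after.items():
        b = before.get(iface)
        if b is None:
            continue  # interface appeared between samples
        rates[iface] = {
            "rx_bps": 8 * (a["rx_bytes"] - b["rx_bytes"]) / interval_s,
            "tx_bps": 8 * (a["tx_bytes"] - b["tx_bytes"]) / interval_s,
            "new_errors": (a["rx_errs"] - b["rx_errs"]) + (a["tx_errs"] - b["tx_errs"]),
        }
    return rates
```

Reporting errors as a delta rather than a running total makes a newly failing cable or port stand out instead of being buried in counts accumulated since boot.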

Another powerful and efficient option is a dedicated system and server monitoring tool such as Sshsysmon, which executes all of its operations over SSH without requiring agents on the monitored machines. This agentless approach simplifies deployment, reduces overhead, and eliminates ongoing agent maintenance, which makes it particularly well suited to environments where installing agents across many systems is impractical or undesirable. As long as the target system is supported (many Linux and Unix distributions, as well as certain macOS systems), no additional software needs to be installed on it, which streamlines the entire monitoring process.
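The agentless model scales to many machines by polling them concurrently. The following sketch is not Sshsysmon's implementation; it assumes the local OpenSSH client with key-based authentication, and the `runner` parameter is a hypothetical hook that lets you swap in another transport (or a stub for testing).

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, Tuple

Runner = Callable[[str, str], str]


def ssh_runner(host: str, command: str) -> str:
    """Run command on host with the local OpenSSH client (key auth assumed)."""
    return subprocess.run(
        ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=5", host, command],
        capture_output=True, text=True, timeout=20, check=True,
    ).stdout


def poll_hosts(hosts: Iterable[str], command: str, runner: Runner = ssh_runner,
               max_workers: int = 8) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Run the same command on every host in parallel.

    Returns (outputs, errors): stdout for hosts that answered, and an
    error description for hosts that failed (unreachable, auth, timeout).
    """
    outputs: Dict[str, str] = {}
    errors: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {host: pool.submit(runner, host, command) for host in hosts}
        for host, future in futures.items():
            try:
                outputs[host] = future.result()
            except Exception as exc:  # record the failure, keep the other hosts
                errors[host] = repr(exc)
    return outputs, errors
```

Threads suit this workload because each worker spends nearly all its time waiting on the network. Keeping errors separate means an unreachable host shows up as a finding in its own right rather than silently disappearing from the results.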

A key consideration with agentless monitoring is the distribution of SSH credentials. Since the monitoring tool needs to authenticate to the target systems, you'll need to provide the necessary credentials. It is important to implement appropriate security measures, such as limiting the privileges of the monitoring user to only what is absolutely necessary. This will minimize the potential impact of a compromised account. By restricting the user's access, you can maintain a secure and efficient monitoring environment while still benefiting from the insights provided by SSH-based monitoring tools.
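One way to limit a monitoring account, assuming OpenSSH 7.2 or later, is to pin its key to a forced command in `authorized_keys`, for example `restrict,command="/usr/local/bin/monitor-gate" ssh-ed25519 AAAA... monitor@collector`. The `restrict` option disables forwarding and PTY allocation, and sshd exposes the command the client actually asked for in the `SSH_ORIGINAL_COMMAND` environment variable. The `monitor-gate` script below is a hypothetical sketch that executes only exact, allowlisted read-only commands.

```python
#!/usr/bin/env python3
"""monitor-gate: hypothetical forced command for a monitoring-only SSH key."""
import os
import shlex
import sys
from typing import List, Optional

# Exact argv sequences the collector may run; everything else is refused.
ALLOWED = {
    ("cat", "/proc/loadavg"),
    ("cat", "/proc/stat"),
    ("cat", "/proc/meminfo"),
    ("cat", "/proc/net/dev"),
    ("df", "-P", "-k"),
}


def check_command(requested: Optional[str]) -> Optional[List[str]]:
    """Return the argv to execute if `requested` is allowlisted, else None."""
    if not requested:
        return None
    try:
        argv = tuple(shlex.split(requested))
    except ValueError:  # e.g. unbalanced quotes
        return None
    return list(argv) if argv in ALLOWED else None


def main() -> int:
    # sshd places the client's requested command in SSH_ORIGINAL_COMMAND.
    argv = check_command(os.environ.get("SSH_ORIGINAL_COMMAND"))
    if argv is None:
        print("monitor-gate: command not permitted", file=sys.stderr)
        return 1
    # Exec directly (no shell), so ';' or '|' cannot chain extra commands.
    os.execvp(argv[0], argv)  # replaces this process; raises OSError on failure


# When installed as a script, end the file with:
# if __name__ == "__main__":
#     sys.exit(main())
```

The entry-point call is left as a comment so the policy function can be imported and tested on its own. Even if the monitoring key leaks, whoever holds it can only read the handful of files listed in `ALLOWED`.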

Let us consider some real-world applications of the concepts we have just discussed. Imagine an e-commerce company whose website experiences sudden and intermittent slowdowns during peak shopping hours. By continuously tracking the response times of their web servers and database servers, the IT team could quickly pinpoint the bottleneck. The monitoring dashboards might reveal high CPU utilization on a database server, indicating that it's struggling to keep up with the incoming requests. With this information, the team could scale up the server resources, optimize the database queries, or distribute the load across multiple servers. The goal is to keep the customer shopping experience undisrupted, with transactions processed smoothly and efficiently.
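Acting on high CPU utilization usually means alerting on a sustained breach rather than a single spike. This sketch, with an assumed 85% threshold and an assumed requirement of three consecutive samples, returns the index where the first sustained breach begins.

```python
from typing import Optional, Sequence


def first_sustained_breach(samples: Sequence[float], threshold: float = 85.0,
                           min_consecutive: int = 3) -> Optional[int]:
    """Index where the first run of >= min_consecutive samples at or above
    threshold begins, or None if there is no such run."""
    run_start: Optional[int] = None
    for i, value in enumerate(samples):
        if value >= threshold:
            if run_start is None:
                run_start = i
            if i - run_start + 1 >= min_consecutive:
                return run_start
        else:
            run_start = None  # a dip below the threshold resets the run
    return None


cpu_percent = [40, 92, 50, 88, 91, 97, 60]  # e.g. one sample per minute
print(first_sustained_breach(cpu_percent))  # 3
```

The isolated 92% reading at index 1 is ignored; only the run starting at index 3 triggers, which filters out the brief spikes that would otherwise generate noisy alerts.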

Now, consider a financial institution that relies heavily on a storage area network (SAN) for critical data storage. Regular monitoring of the SAN's disk space, read/write speeds, and overall health is paramount. Suppose the monitoring system detects that a particular storage volume is approaching its capacity limit. The alert prompts the IT team to take proactive steps, such as migrating data to a larger volume or implementing data compression techniques. A quick response of this kind prevents a potential outage, keeps critical financial data available, and reduces the risk of data loss from unexpected issues.
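Detecting that a volume is *approaching* capacity can be made concrete with a simple linear forecast. The sketch below fits an ordinary least-squares line to (day, used) samples and projects when usage reaches capacity; it assumes roughly linear growth, which real workloads do not always follow, and the function name is hypothetical.

```python
from typing import Optional, Sequence, Tuple


def days_until_full(samples: Sequence[Tuple[float, float]],
                    capacity: float) -> Optional[float]:
    """Project days from the latest sample until usage reaches capacity.

    samples are (day, used) pairs in the same unit as capacity. Fits an
    ordinary least-squares line; returns None if usage is flat or shrinking.
    """
    n = len(samples)
    if n < 2:
        return None
    mean_t = sum(t for t, _ in samples) / n
    mean_u = sum(u for _, u in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        return None  # all samples taken at the same time
    slope = sum((t - mean_t) * (u - mean_u) for t, u in samples) / var_t
    if slope <= 0:
        return None
    last_t = max(t for t, _ in samples)
    fitted_now = mean_u + slope * (last_t - mean_t)  # trend value at the latest sample
    return max(0.0, (capacity - fitted_now) / slope)
```

For instance, samples of 700, 710, 720, and 730 GB on four consecutive days against a 1,000 GB volume give a slope of 10 GB/day and a projection of 27 days until full. Fitting a trend rather than using only the last two readings keeps a single noisy measurement from swinging the forecast.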

In another scenario, consider a software development company that uses a cluster of Linux servers for its build and testing processes. By monitoring the CPU utilization, memory consumption, and network traffic of these servers, the team can identify performance bottlenecks in its build pipelines. If the monitoring dashboards show that a particular server consistently maxes out its CPU during builds, the team can add servers to the cluster. With adequate capacity, code compiles and tests run quickly, which improves developer productivity, reduces build times, and speeds up the software release cycle.

Tools like `sshlog` can also provide invaluable insights into security incidents. Consider a scenario where multiple failed login attempts are observed on a critical server. If the server administrator is alerted to such events, they can immediately investigate. By tracking the IP addresses behind the failed attempts, they can identify where the attacks originate and act at once to block access or strengthen the server's security posture. Used this way, these tools help prevent future attacks.
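Turning failed-login records into a blocking decision usually involves a sliding time window, the same idea behind tools such as fail2ban (its `maxretry` and `findtime` settings). This sketch assumes you already have (timestamp, IP) pairs for failed attempts in time order; the default thresholds are hypothetical, and the actual block (a firewall rule, for example) is left to your own tooling.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Set, Tuple


def brute_force_sources(events: Iterable[Tuple[float, str]],
                        max_failures: int = 5,
                        window_s: float = 600.0) -> Set[str]:
    """Return IPs with at least max_failures failed logins inside any window_s span.

    events are (unix_timestamp, ip) pairs for failed logins, in time order.
    """
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    flagged: Set[str] = set()
    for ts, ip in events:
        window = recent[ip]
        window.append(ts)
        # Drop this IP's failures that have fallen out of the window.
        while ts - window[0] > window_s:
            window.popleft()
        if len(window) >= max_failures:
            flagged.add(ip)
    return flagged
```

A deque per address keeps each update cheap: new timestamps are appended on the right and expired ones are discarded from the left. A slow, steady trickle of failures from one address is not flagged, while a rapid burst is.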

In conclusion, the ability to diagnose network issues and maintain peak performance is essential in today's complex and interconnected digital landscape. By continuously tracking critical components, visualizing data effectively, and proactively monitoring Linux systems via SSH, organizations can gain valuable insights into their network's health. Together, real-time insights and the ability to address underlying issues quickly help ensure business continuity, safeguard data, and improve the overall user experience. This proactive approach is no longer optional; it is a fundamental requirement for any organization that seeks to thrive in the digital age. The goal is networks that are stable, secure, and delivering the performance that organizations, their employees, and their customers expect.